Why this comparison

With so many capable AIs out there, the “best” model depends on what you’re building and how you like to work. This post gives you a practical, no‑hype way to pick the right tool for coding, game prototyping, research, creative work, and privacy‑sensitive tasks. Think of it as a field guide you can revisit as your projects evolve.

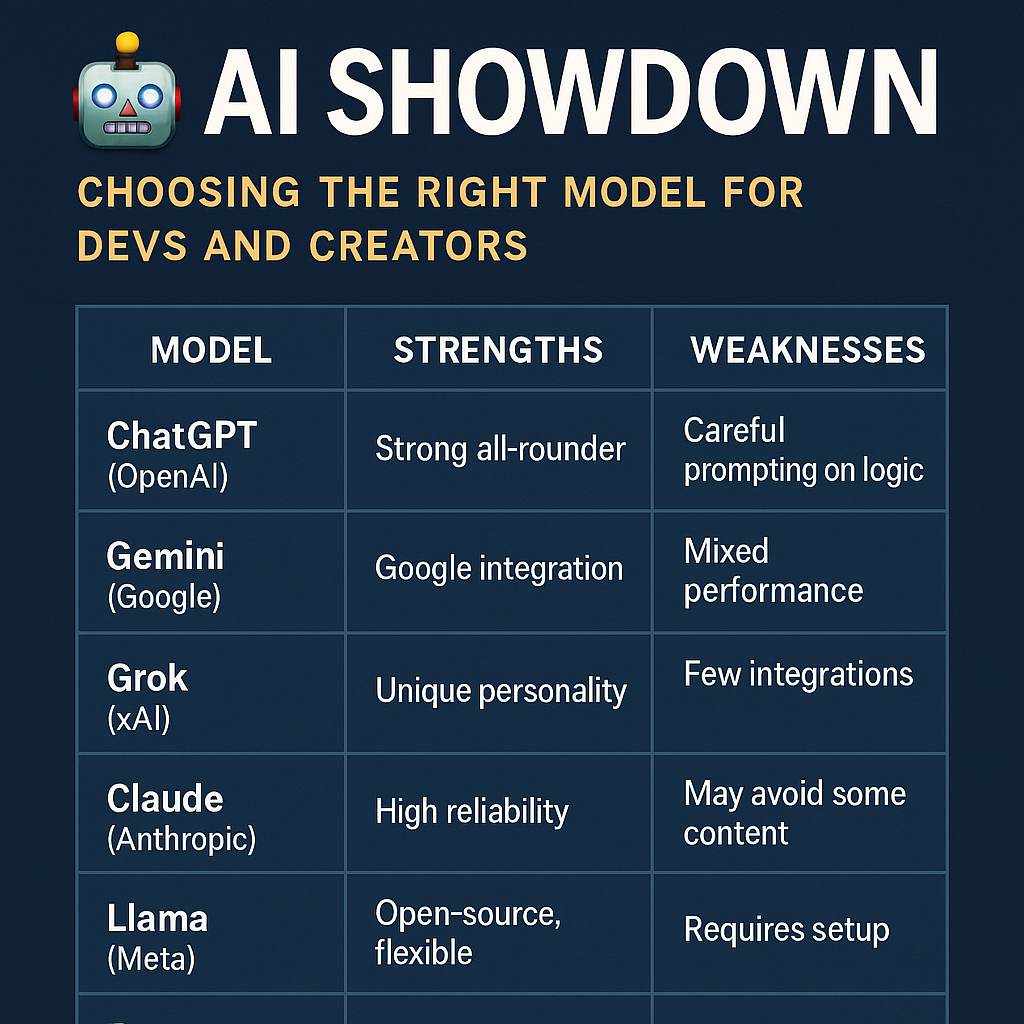

Quick comparison

| Model family | Where it shines | Trade‑offs | Great for |
|---|---|---|---|
| OpenAI ChatGPT (e.g., GPT‑4 class) | Strong all‑rounder for reasoning, coding assistance, and creative drafting | May require careful prompting on multi‑step logic and long toolchains | General use, mixed creative + technical work |
| Google Gemini (e.g., 1.5 Pro/Flash) | Integration with Google Workspace; good at understanding long inputs | Results vary across tasks; best inside Google ecosystem | Docs, Sheets, Gmail workflows, research summaries |
| Anthropic Claude 3 family | Reliable long‑form writing and careful reasoning; helpful, balanced tone | Conservative with risky outputs; slower when asked for exhaustive detail | Mission‑critical drafts, documentation, analysis |
| Meta Llama 3 (open models) | Local/hosted flexibility; good community tooling and fine‑tuning options | Requires setup; quality depends on model size and serving | Private deployments, customization, on‑device experiments |
| Mistral (e.g., Mistral Large, open variants) | Lean, efficient models; solid coding and multilingual support | Open variants need guardrails; large tasks may need orchestration | APIs on a budget, multilingual apps, OSS pipelines |
| Qwen (Alibaba) | Strong coder variants and cost efficiency | Quality varies by checkpoint; careful evaluation recommended | Rapid prototyping, batch coding tasks |

Which AI for which task

- Web/app scaffolding: Use a generalist (ChatGPT/Claude) to plan architecture, then iterate with a coder‑leaning model (Qwen/Mistral) for speed.

- Game prototyping: Generalist for design docs and loop logic; coder model to generate spritesheet utilities, collision helpers, and entity systems.

- Explaining unfamiliar code: Claude/ChatGPT tend to produce clearer, structured walkthroughs with risks and edge cases called out.

- Long research and documentation: Claude/Gemini handle long context well and keep tone consistent across sections.

- Productivity inside Google: Gemini pairs naturally with Docs/Sheets/Gmail and can streamline content pipelines.

- Privacy‑sensitive work: Prefer self‑hosted open models (Llama/Mistral/Qwen) or vendor settings that limit data retention.
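If you end up juggling several of these, the list above can be written down as a small lookup table. This is a minimal sketch, not a real router: the task keys, the `ROUTES` table, and `pick_family` are names made up for illustration, and the family labels are plain strings rather than actual API model identifiers.

```python
from typing import Optional, Set

# Hypothetical routing table derived from the task list above.
# Keys and family labels are illustrative, not real model IDs.
ROUTES = {
    "scaffold_plan": ["ChatGPT", "Claude"],
    "scaffold_iterate": ["Qwen", "Mistral"],
    "game_design": ["ChatGPT", "Claude"],
    "game_utilities": ["Qwen", "Mistral"],
    "explain_code": ["Claude", "ChatGPT"],
    "long_docs": ["Claude", "Gemini"],
    "google_workflows": ["Gemini"],
    "privacy_sensitive": ["Llama", "Mistral", "Qwen"],
}


def pick_family(task: str, available: Set[str]) -> Optional[str]:
    """Return the first suggested family for `task` that you have access to."""
    for family in ROUTES.get(task, []):
        if family in available:
            return family
    return None  # unknown task, or none of the suggestions is available


# Example: a team with access to Gemini and a self-hosted Qwen.
print(pick_family("long_docs", {"Gemini", "Qwen"}))
```

Keeping the mapping in data rather than in scattered `if` statements makes it easy to update when you re-evaluate your picks.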

Mini reviews

OpenAI ChatGPT (GPT‑4 class)

Strengths: Balanced reasoning, clean code suggestions, and strong editing for tone and style. Great at blending creative with technical tasks.

Watch‑outs: For multi‑file projects, ask it to produce a plan, file tree, and test list before code. Use follow‑ups to enforce constraints.

Best fit: One‑stop shop for devs and creators who need reliable drafting plus code.

Google Gemini (1.5 Pro/Flash)

Strengths: Handles long inputs well and plays nicely with Google tools. Good for summarization and organization.

Watch‑outs: For complex reasoning chains, add explicit step checks and verifications.

Best fit: Teams already living in Docs/Sheets who want smoother workflows.

Anthropic Claude 3 family

Strengths: High signal‑to‑noise in long writing, careful with claims, and helpful at outlining and refining requirements.

Watch‑outs: May avoid speculative content; provide clear permissions and boundaries for creative tasks.

Best fit: Documentation, research summaries, specs, and careful refactors.

Meta Llama 3

Strengths: Open weights enable local runs, customization, and private deployments; active ecosystem.

Watch‑outs: Quality depends on model size, fine‑tuning, and serving stack; needs MLOps care.

Best fit: Builders who need control, privacy, or offline capability.

Mistral

Strengths: Efficient inference, solid code generation, and OSS‑friendly licensing on some variants.

Watch‑outs: Add guardrails and evals for production; consider routing harder tasks to a stronger model.

Best fit: Cost‑sensitive APIs, multilingual apps, and lightweight services.

Qwen (coder‑leaning)

Strengths: Fast code output, helpful for scaffolding, regex, and boilerplate.

Watch‑outs: Review carefully for edge cases and integration pitfalls; pair with tests.

Best fit: Rapid prototyping and batch transformations.

Decision guide

| Goal | Good pick | Tip |
|---|---|---|
| Highest reliability in long writing | Claude family | Have it outline → draft → self‑critique → revise |
| Balanced creative + code | ChatGPT | Ask for a plan and tests before implementation |
| Deep Google workflows | Gemini | Use structured prompts with headings and checklists |
| Privacy and control | Llama/Mistral/Qwen (self‑hosted) | Keep data local; add retrieval and eval harness |
| Fast, budget coding | Qwen/Mistral | Enforce linting and unit tests on every snippet |
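The "tests on every snippet" tip can be automated with very little code. The sketch below assumes the generated snippet is Python and that your tests are plain `assert` statements; `check_snippet` is a hypothetical helper, and the `ast.parse` call is only a syntax check standing in for a real linter. It uses `exec`, so run it only in a sandbox or on code you are willing to execute.

```python
import ast
from typing import List


def check_snippet(source: str, tests: str) -> List[str]:
    """Return a list of problems; an empty list means the snippet passed."""
    # Step 1: reject anything that does not even parse.
    try:
        ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error on line {exc.lineno}: {exc.msg}"]

    # Step 2: define the snippet's names, then run the assertions against them.
    namespace: dict = {}
    try:
        exec(source, namespace)
        exec(tests, namespace)
    except AssertionError as exc:
        return [f"test failed: {exc}"]
    except Exception as exc:
        return [f"{type(exc).__name__}: {exc}"]
    return []


generated = "def add(a, b):\n    return a + b\n"
print(check_snippet(generated, "assert add(2, 3) == 5"))
```

In a real pipeline you would likely swap step 1 for your linter of choice and step 2 for your test runner; the point is that nothing gets merged unless the returned list is empty.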

Prompt patterns that work

Spec → Plan → Build: “You are a senior engineer. First produce a bullet plan and file tree, then wait for approval.”

Constrained output: “Return only a JSON object matching this schema … If uncertain, set the reason field.”
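A constrained-output prompt is only useful if you check the reply before trusting it. Here is a minimal sketch using only the standard library. The two-field `SCHEMA` (`answer` and `reason`) and the `parse_reply` helper are assumptions made for illustration; the real schema is whatever you put in your prompt.

```python
import json

# Hypothetical schema: field name -> required Python type.
SCHEMA = {"answer": str, "reason": str}


def parse_reply(raw: str) -> dict:
    """Parse a model reply and check it against SCHEMA; raise ValueError if it does not fit."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    for field, expected in SCHEMA.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        if not isinstance(data[field], expected):
            raise ValueError(f"field {field!r} should be {expected.__name__}")
    extra = set(data) - set(SCHEMA)
    if extra:
        raise ValueError(f"unexpected fields: {sorted(extra)}")
    return data


reply = '{"answer": "Use a quadtree", "reason": ""}'
print(parse_reply(reply)["answer"])
```

When `parse_reply` raises, a common follow-up is to send the error message back to the model and ask it to try again.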

Self‑check: “List 5 failure modes or edge cases for the above solution and how you’d test them.”

Refactor safely: “Explain what this code does in plain English, then propose a minimal refactor with tests.”

Pricing and access notes

Most providers use usage‑based pricing (tokens/characters) with free or limited tiers. Open models can reduce variable costs but introduce hosting and maintenance overhead. For team projects, consider a hybrid: a reliable generalist for specs and reviews, plus a fast coder model for bulk generation.
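To see how a hybrid setup might play out on your bill, a back-of-the-envelope estimate is enough. The sketch below assumes per-million-token pricing with separate input and output rates. The `Rate` class, `estimate_cost`, and every number in it are placeholders for illustration, not real prices; plug in the figures from your provider's pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rate:
    """Price per one million tokens, in whatever currency you are billed in."""
    input_per_million: float
    output_per_million: float


def estimate_cost(rate: Rate, input_tokens: int, output_tokens: int) -> float:
    """Usage-based cost for one workload at the given rate."""
    return (
        input_tokens * rate.input_per_million
        + output_tokens * rate.output_per_million
    ) / 1_000_000


# Placeholder rates for illustration only; these are NOT real prices.
generalist = Rate(input_per_million=10.0, output_per_million=30.0)
coder = Rate(input_per_million=1.0, output_per_million=2.0)

# Hybrid month: specs and reviews on the generalist, bulk generation on the coder.
specs = estimate_cost(generalist, input_tokens=200_000, output_tokens=50_000)
bulk = estimate_cost(coder, input_tokens=2_000_000, output_tokens=1_000_000)
print(f"specs/reviews: {specs:.2f}, bulk generation: {bulk:.2f}")
```

For self-hosted open models, the variable rate drops but you would add a fixed line for hosting and maintenance, which this sketch leaves out.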

Final take

You don’t need the “one best” AI; you need the right mix for your workflow. Start with a generalist you trust, add a coder model for speed, and keep an open model in your toolbox for privacy or customization. Re‑evaluate quarterly as your needs and the model landscape change.
